Recently, Google introduced SoundStream, an end-to-end neural audio codec. According to Google, SoundStream is the first neural network codec to work on both speech and music while being able to run in real time on a smartphone CPU. Earlier this year, Google released Lyra, a neural audio codec for low-bitrate speech.

Why does Google focus on low-bitrate audio compression when more and more bandwidth is available today? What's the main difference between SoundStream and Lyra? Do AI audio codecs only work at low bitrates? What's Google's next step?

With these questions in mind, LiveVideoStack interviewed Jamieson Brettle (Senior Product Manager at Google) and Jan Skoglund (Staff Software Engineer at Google) via email.

LiveVideoStack: Hi Jamieson and Jan, congratulations on the progress with SoundStream! This is big news in the audio and video world, and it has also caught the attention of engineers in China. To give readers deeper insight into this novel neural audio codec, we'd like to ask you a few questions.

Q1: As people today have more and more bandwidth, why does Google focus on low-bitrate audio compression?

Jamieson&Jan: While infrastructure continues to improve, it still takes time for networks to become truly ubiquitous. Additionally, users and applications keep demanding more bandwidth, so even as available bandwidth increases, demand still outpaces supply. Anything we can do to reduce bandwidth therefore improves the overall user experience.

Q2: What's the main difference between SoundStream and Lyra, the neural audio codec released earlier this year?

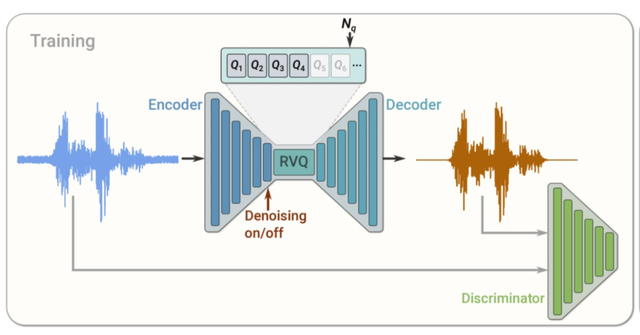

Jamieson&Jan: The first version of Lyra used an internal synthesis engine based on WaveRNN, whereas the new version will be based on SoundStream, which uses a network similar to an autoencoder.

Q3: Why is Google developing two AI codecs, SoundStream and Lyra? Could Google reveal the roadmap of this project? How will SoundStream be integrated into Lyra?

Jamieson&Jan: Using ML for audio coding is still in its infancy, so we're seeing rapid improvements as research in the area ramps up. Having ongoing projects allows us to productize research quickly and get the best codecs into real-world applications. Future versions of Lyra will use SoundStream as the underlying engine. This allows existing developers to keep using the same Lyra APIs while getting dramatically improved performance.

Q4: According to the paper, SoundStream surpasses Lyra in sound quality (at the same bitrates), robustness to signal types (speech, music, clean, noisy), algorithmic latency, and computational complexity. Will Lyra be completely replaced?

Jamieson&Jan: Because we're seeing such great improvements in sound quality and robustness to noise, and because SoundStream can handle multiple types of audio, the autoregressive engine in Lyra will be replaced by the new SoundStream engine.

Q5: According to the experimental results in the journal paper, SoundStream's performance seems to reach its limits at 12 kbps. Does Google believe AI audio codecs only work in low-bitrate scenarios? Is it possible for AI audio codecs to outperform classical codecs at medium-to-high bitrates (such as typical AAC bitrates)?

Jamieson&Jan: We think that a range of bitrates and applications can benefit from AI codecs. We are currently working on improving neural-network-based audio coding at higher bitrates.

Q6: Can SoundStream encode different sound types (speech, music, and mixed signals) at the same time?

Jamieson&Jan: SoundStream does not classify sound types and can handle different sounds at the same time.

Q7: Does a neural codec have an obvious advantage over classical codecs in terms of complexity?

Jamieson&Jan: So far in neural codecs, we've seen less complexity in the encoder and more in the decoder, typically resulting in much higher overall complexity than in codecs like Opus. However, we think that over time there will be a number of ways to make neural coding more efficient, through improved hardware support and new algorithmic advances.

Q8: Will SoundStream be an all-purpose audio codec, or will it focus on a specific area?

Jamieson&Jan: Initially, applications will likely focus on real-time communications, but SoundStream also shows promise for general-purpose coding.

Q9: Since SoundStream will be released as part of the next, improved version of Lyra, is it possible for this new Lyra to replace Opus one day?

Jamieson&Jan: At least in the short term, we see Opus and Lyra coexisting; in fact, our team continues to do research on Opus and add improvements to it.

Q10: What’s Google’s next step in audio compression?

Jamieson&Jan: We'll continue to explore efficiencies in audio compression across the application space, using both ML and traditional coding methods.